Softmax function from scratch

Softmax is a generalization of the logistic (sigmoid) function used in logistic regression, and it can be used for multi-class classification. Like the sigmoid, the softmax function squashes the output of each unit to lie between 0 and 1. In addition, it normalizes the outputs so that they sum to 1, which lets them be read as class probabilities.
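
The link to the logistic function can be checked numerically. The sketch below assumes two arbitrary, hypothetical scores a and b, computes softmax inline as exp(scores) / sum(exp(scores)), and compares its first output with the sigmoid of the difference a - b. The two agree because exp(a) / (exp(a) + exp(b)) = 1 / (1 + exp(-(a - b))).

```r
# Two arbitrary (hypothetical) scores for a two-class problem
a <- 1.5
b <- -0.5

# Softmax over the two scores, written inline
scores <- c(a, b)
p_softmax <- exp(scores) / sum(exp(scores))

# Logistic (sigmoid) function applied to the score difference
sigmoid <- function(x) 1 / (1 + exp(-x))
p_sigmoid <- sigmoid(a - b)

# Both values are the probability of the first class
print(c(p_softmax[1], p_sigmoid))
# [1] 0.8807971 0.8807971
```

With more than two classes there is no single difference to feed into a sigmoid, which is where softmax takes over.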

Softmax Function :-

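For an input vector z = (z_1, ..., z_K), softmax maps each element z_i to exp(z_i) / (exp(z_1) + ... + exp(z_K)). Every output is therefore positive, the outputs sum to 1, and a larger input always receives a larger output.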
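
A small worked example of the computation, using the arbitrary input z = c(1, 2, 3): each element is exponentiated, and each exponential is then divided by their total so that the results sum to 1.

```r
z <- c(1, 2, 3)   # example scores (arbitrary)
e <- exp(z)       # exponentiate every element
p <- e / sum(e)   # normalize so the outputs sum to 1

round(p, 4)
# [1] 0.0900 0.2447 0.6652
sum(p)
# [1] 1
```

The largest score gets the largest probability, but the smaller scores still receive a nonzero share.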

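Softmax Using Vectorized Form :-

```r
library(testit)

# Vectorized softmax: exp() and sum() operate on the whole vector at once
softmax <- function(k){
  testit::assert(is.numeric(k))   # inputs must be numeric
  return(exp(k)/sum(exp(k)))
}
```

Softmax Using Loops :-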

softmax_loops <- function(k){

testit::assert(is.numeric(k))

sum_exp <- sum(exp(k))

output <- rep(0,length(k))

for (i in seq_along(k)){

output[i] <- exp(k[i])/sum_exp

}

return(output)

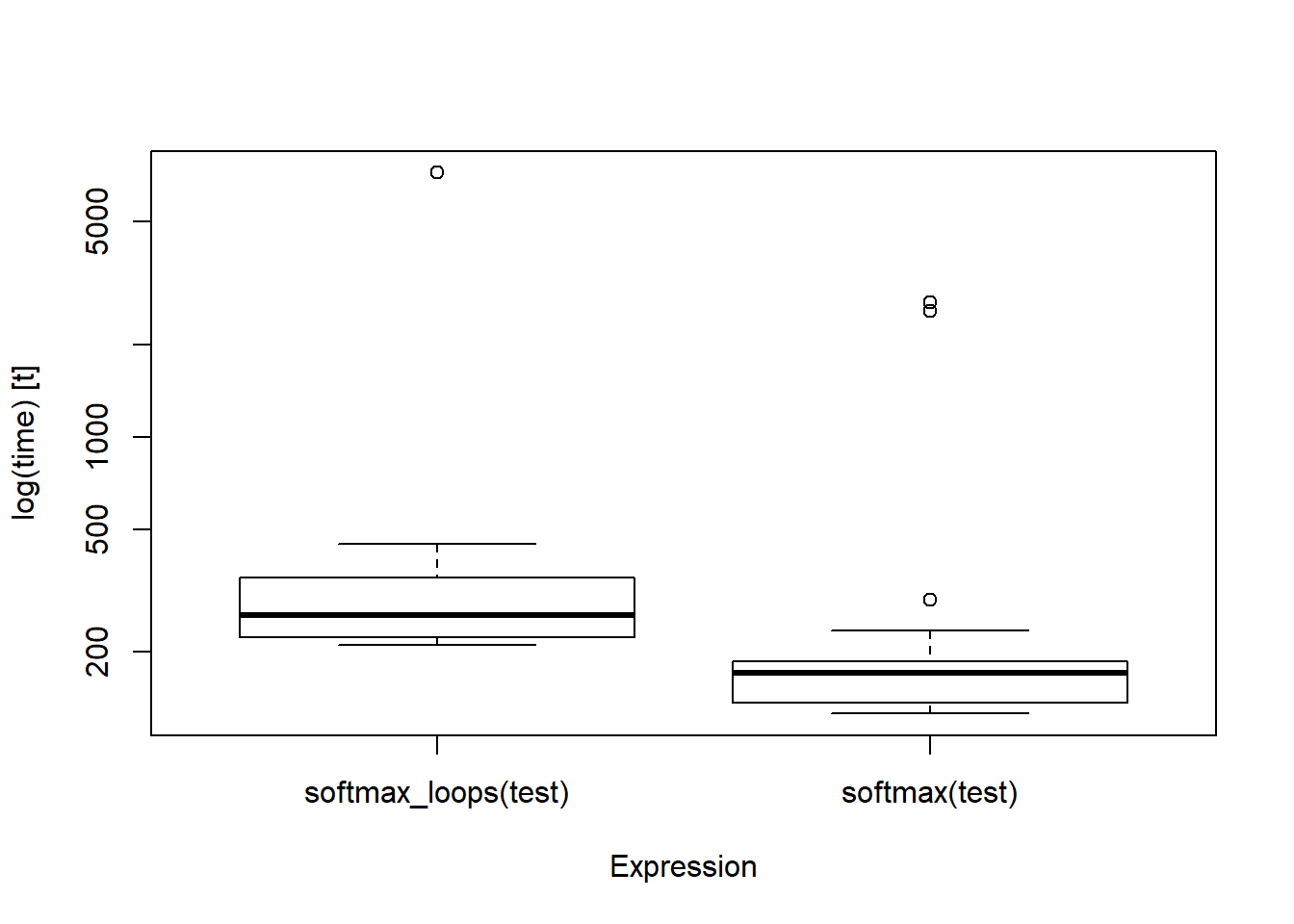

}Benchmarking

```r
library(microbenchmark)

# 1,000 random test values: a standard normal plus a normal with sd 2
test <- rnorm(n = 1000) + (rnorm(n = 1000) * 2)

# Time each implementation 100 times on the same input
res <- microbenchmark::microbenchmark(softmax_loops(test), softmax(test), times = 100)

res
# Unit: microseconds
#                 expr     min       lq     mean   median      uq      max neval
#  softmax_loops(test) 209.558 221.8295 357.9284 262.4195 347.941 7226.899   100
#        softmax(test) 125.735 136.6850 214.9951 170.1005 185.204 2735.574   100

# Plot the distribution of timings for each expression
boxplot(res)
```

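The benchmark shows that the vectorized softmax is faster: on this 1,000-element input its median time is about 170 microseconds, compared with about 262 microseconds for the loop version, roughly 1.5 times faster on the machine used here. Part of softmax_loops is already vectorized (sum(exp(k))), so the measured gap reflects only the element-by-element loop that computes exp(k[i])/sum_exp.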
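
Both implementations call exp() on the raw inputs, so in double precision any element above roughly 709.78 overflows to Inf and the division returns NaN. A common remedy, sketched here as an addition that is not part of the original benchmark, subtracts max(k) before exponentiating; the shift cancels between numerator and denominator, so the result is mathematically unchanged. The names softmax_stable and softmax_naive are hypothetical, and the sketch uses base R stopifnot() instead of testit so that it has no package dependencies.

```r
# Numerically stable softmax (hypothetical helper, not from the original post)
softmax_stable <- function(k) {
  stopifnot(is.numeric(k))
  shifted <- k - max(k)   # largest element becomes 0, so exp() cannot overflow
  e <- exp(shifted)
  e / sum(e)
}

# Copy of the post's vectorized formula, for comparison
softmax_naive <- function(k) exp(k) / sum(exp(k))

x <- c(1, 2, 3)
all.equal(softmax_naive(x), softmax_stable(x))
# [1] TRUE

big <- c(1000, 1001, 1002)
softmax_naive(big)    # exp(1000) is Inf, so Inf / Inf gives NaN
# [1] NaN NaN NaN
softmax_stable(big)   # same probabilities as for c(1, 2, 3)
```

The shift costs one extra pass over the vector (max()), which is usually negligible next to the exp() calls.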